Python Web Scraping Tool

Introduction

Web scraping is a technique employed to extract a large amount of data from websites and format it for use in a variety of applications. Web scraping allows us to automatically extract data and present it in a usable configuration, or process and store the data elsewhere. The data collected can also be part of a pipeline where it is treated as an input for other programs.

The incredible amount of data on the Internet is a rich resource for any field of research or personal interest. To effectively harvest that data, you’ll need to become skilled at web scraping. The Python libraries requests and Beautiful Soup are powerful tools for the job. If you like to learn with hands-on examples and you have a basic understanding of Python and HTML, then this tutorial is for you.

In the past, extracting information from a website meant copying the text available on a web page manually. This method is highly inefficient and not scalable. These days, there are some nifty packages in Python that will help us automate the process! In this post, I’ll walk through some use cases for web scraping, highlight the most popular open source packages, and walk through an example project to scrape publicly available data on Github.

Web Scraping Use Cases

Web scraping is a powerful data collection tool when used efficiently. Some examples of areas where web scraping is employed are:

- Search: Search engines use web scraping to index websites for them to appear in search results. The better the scraping techniques, the more accurate the results.

- Trends: In communication and media, web scraping can be used to track the latest trends and stories since there is not enough manpower to cover every new story or trend. With web scraping, you can achieve more in this field.

- Branding: Web scraping also allows communications and marketing teams to scrape information about their brand’s online presence. By scraping for reviews about your brand, you can be aware of what people think or feel about your company and tailor outreach and engagement strategies around that information.

- Machine Learning: Web scraping is extremely useful in mining data for building and training machine learning models.

- Finance: It can be useful to scrape data that might affect movements in the stock market. While some online aggregators exist, building your own collection pool allows you to manage latency and ensure data is being correctly categorized or prioritized.

Tools & Libraries

There are several popular online libraries that provide programmers with the tools to quickly ramp up their own scraper. Some of my favorites include:

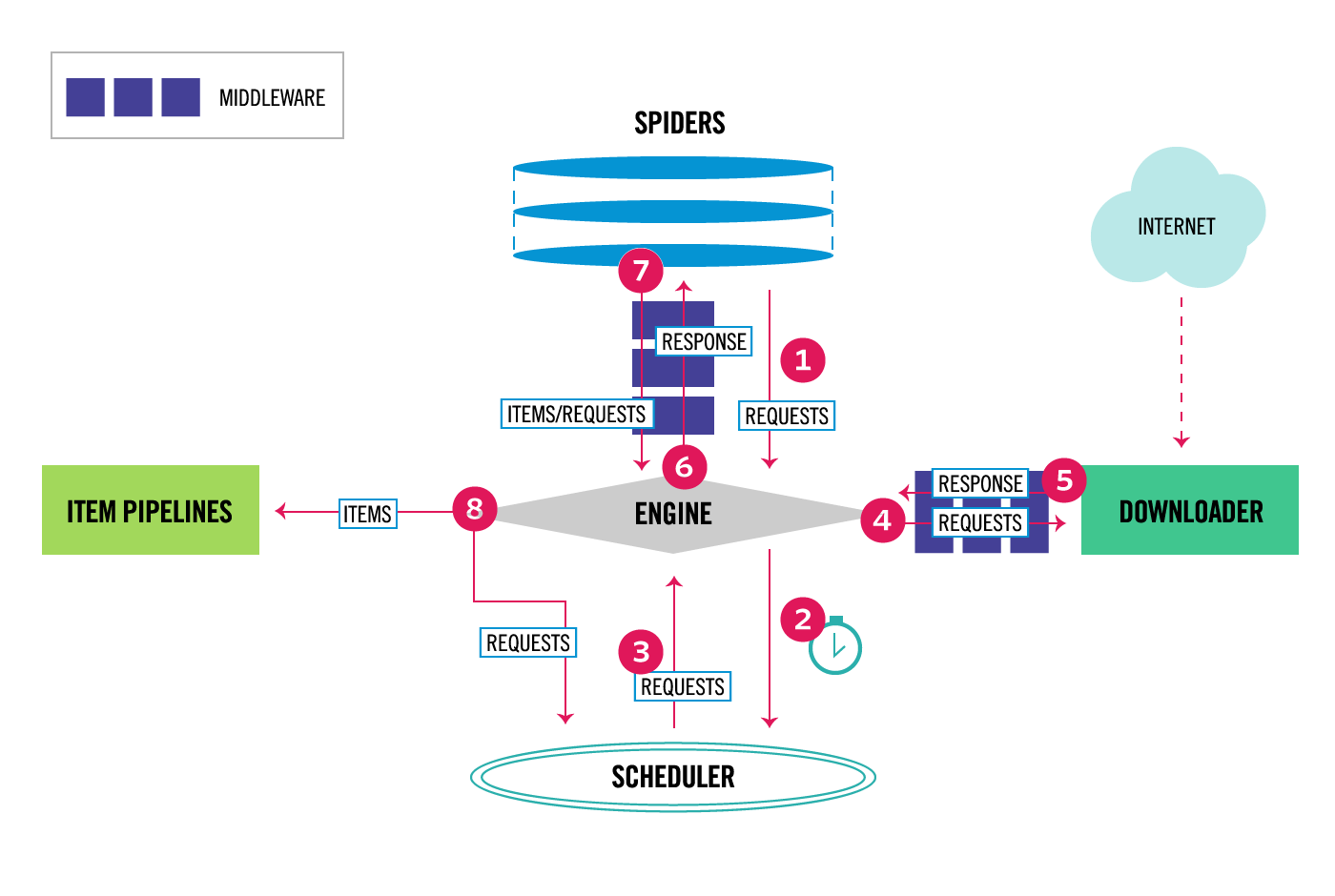

- Requests – a library to send HTTP requests, which is very popular and easier to use compared to the standard library’s urllib.

- BeautifulSoup – a parsing library that uses different parsers to extract data from HTML and XML documents. It has the ability to navigate a parsed document and extract what is required.

- Scrapy – a Python framework that was originally designed for web scraping but is increasingly employed to extract data using APIs or as a general purpose web crawler. It can also be used to handle output pipelines. With Scrapy, you can create a project with multiple scrapers. It also has a shell mode where you can experiment with its capabilities.

- lxml – provides Python bindings to a fast HTML and XML processing library called libxml2. It can be used on its own to parse sites but requires more code to work correctly compared to BeautifulSoup. BeautifulSoup can also use it internally as its parser.

- Selenium – a browser automation framework. Useful when parsing data from dynamically changing web pages when the browser needs to be imitated.

| Library | Learning curve | Can fetch | Can process | Can run JS | Performance |
| --- | --- | --- | --- | --- | --- |
| requests | easy | yes | no | no | fast |
| BeautifulSoup4 | easy | no | yes | no | normal |
| lxml | medium | no | yes | no | fast |
| Selenium | medium | yes | yes | yes | slow |
| Scrapy | hard | yes | yes | no | normal |

Using the Beautifulsoup HTML Parser on Github

We’re going to use the BeautifulSoup library to build a simple web scraper for Github. I chose BeautifulSoup because it is a simple library for extracting data from HTML and XML files with a gentle learning curve and relatively little effort required. It provides handy functionality to traverse the DOM tree in an HTML file with helper functions.

Requirements

In this guide, I will expect that you have a Unix or Windows-based machine. You might want to install Kite for smart autocompletions and in-editor documentation while you code. You are also going to need to have the following installed on your machine:

- Python 3

- BeautifulSoup4 library

Profiling the Webpage

We first need to decide what information we want to gather. In this case, I’m hoping to fetch a list of a user’s repositories along with their titles, descriptions, and primary programming language. To do this, we will scrape Github to get the details of a user’s repositories. While this information is available through Github’s API, scraping the data ourselves will give us more control over the format and thoroughness of the end data.

Once that’s done, we’ll profile the website to see where our target information is located and create a plan to retrieve it.

To profile the website, visit the webpage and inspect it to get the layout of the elements.

Let’s visit Guido van Rossum’s Github profile as an example and view his repositories:

- The div containing the list of repos: From the screenshot above, we can tell that a user’s list of repositories is located in a div called user-repositories-list, so this will be the focus of our scraping. This div contains list items that are the list of repositories.

- List item that contains a single repo’s info / relevant info on DOM tree: The next part shows us the location of a single list item that contains a single repository’s information. We can also see this section as it appears on the DOM tree.

- Location of the repository’s name and link: Inside a single list item, there is an href link that contains a repository’s name and link.

- Location of repository’s description

- Location of repository’s language

For our simple scraper, we will extract the repo name, description, link, and the programming language.

Scraper Setup

We’ll first set up our virtual environment to isolate our work from the rest of the system, then activate the environment. Type the following commands in your shell or command prompt:

    mkdir scraping-example
    cd scraping-example
    python -m venv venv-scraping

If you’re using a Mac, you can use this command to activate the virtual environment:

    source venv-scraping/bin/activate

On Windows the virtual environment is activated by the following command:

    venv-scraping\Scripts\activate.bat

Finally, install the required packages:

    pip install bs4 requests

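The first package, requests, will allow us to query websites and receive the website’s HTML content as returned by the server. Because requests does not execute JavaScript, this can differ from what a browser renders on script-heavy pages. It is this HTML content that our scraper will go through to find the information we require.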

The second package, BeautifulSoup4, will allow us to go through the HTML content, then locate and extract the information we require. It allows us to search for content by HTML tags, elements, and class names using Python’s inbuilt parser module.

The Simple Scraper Function

Our function will query the website using requests and return its HTML content.
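A minimal sketch of such a function is shown here. It assumes the repositories tab of a profile is served at `https://github.com/<username>?tab=repositories`; the function name `fetch_repos_page` and the 10-second timeout are illustrative choices rather than anything prescribed by the post.

```python
import requests


def fetch_repos_page(username, timeout=10):
    """Return the raw HTML of a user's GitHub repositories tab."""
    # Assumed URL pattern: the profile page with ?tab=repositories appended.
    url = f"https://github.com/{username}"
    response = requests.get(url, params={"tab": "repositories"}, timeout=timeout)
    # Raise an exception on 4xx/5xx responses instead of parsing an error page.
    response.raise_for_status()
    return response.text
```

Calling `fetch_repos_page("gvanrossum")` would return the page source as a string, ready to be handed to a parser.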

The next step is to use BeautifulSoup library to go through the HTML and extract the div that we identified contains the list items within a user’s repositories. We will then loop through the list items and extract as much information from them as possible for our use.
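The parsing step could look roughly like the sketch below. The `user-repositories-list` id comes from the profiling section; the `itemprop` attribute values, the tag names, and the `SAMPLE_HTML` snippet are assumptions made for illustration, and GitHub's real markup may differ or change over time.

```python
from bs4 import BeautifulSoup

# Hypothetical, simplified stand-in for the markup of a repositories page.
SAMPLE_HTML = """
<div id="user-repositories-list">
  <ul>
    <li>
      <h3><a href="/example-user/demo-repo" itemprop="name codeRepository">demo-repo</a></h3>
      <p itemprop="description">A small demo project.</p>
      <span itemprop="programmingLanguage">Python</span>
    </li>
    <li>
      <h3><a href="/example-user/notes" itemprop="name codeRepository">notes</a></h3>
    </li>
  </ul>
</div>
"""


def extract_repos(html):
    """Return a list of dicts describing each repository found in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    container = soup.find("div", id="user-repositories-list")
    if container is None:
        return []
    repos = []
    for item in container.find_all("li"):
        # Search by attribute rather than by tag alone to pick the right element.
        link = item.find("a", attrs={"itemprop": "name codeRepository"})
        if link is None:
            continue
        description = item.find("p", attrs={"itemprop": "description"})
        language = item.find("span", attrs={"itemprop": "programmingLanguage"})
        repos.append({
            "name": link.get_text(strip=True),
            "link": "https://github.com" + link["href"],
            "description": description.get_text(strip=True) if description else None,
            "language": language.get_text(strip=True) if language else None,
        })
    return repos


for repo in extract_repos(SAMPLE_HTML):
    print(repo)
```

Missing fields are returned as `None` so that a repository without a description or detected language does not break the loop.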

You may have noticed how we extracted the programming language. BeautifulSoup does not only allow us to search for information using HTML elements but also using attributes of the HTML elements. This is a simple trick to enhance accuracy when working with programming-related data sets.

That’s it! You have successfully built your Github Repository Scraper and can test it on a bunch of other users’ repositories. You can check out Kite’s Github repository to easily access the code from this post and others from their Python series.

Now that you’ve built this scraper, there are myriad possibilities to enhance and utilize it. For example, this scraper can be modified to send a notification when a user adds a new repository. This would enable you to be aware of a developer’s latest work. (Remember when I mentioned that scraping tools are useful in finance? Maintaining your own scraper and setting up notifications for new data would be very useful in that setting).
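One way to sketch the notification idea is to compare the repository names seen on the previous run with the current scrape. The helper names `find_new_repos` and `notify` are hypothetical, each repository is assumed to be a dict with a `name` key, and printing stands in for whatever notification channel you prefer.

```python
def find_new_repos(known_names, current_repos):
    """Return the repositories whose names were not seen on the previous run."""
    known = set(known_names)
    return [repo for repo in current_repos if repo["name"] not in known]


def notify(repo):
    # Placeholder: swap the print for an email, chat message, or webhook call.
    print(f"New repository spotted: {repo['name']}")


previous = ["demo-repo"]
current = [{"name": "demo-repo"}, {"name": "fresh-idea"}]
for repo in find_new_repos(previous, current):
    notify(repo)
```

In practice the list of known names would be persisted between runs, for example in a small file or database.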

Another idea would be to build a browser extension that displays a user’s repositories on hover at any page on Github. The scraper would feed data into an API that serves the extension. This data will be then served and displayed on the extension. You can also build a comparison tool for Github users based on the data you scrape, creating a ranking based on how actively users update their repositories or using keyword detection to find repositories that are relevant to you.
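The keyword-detection idea can be sketched with a simple case-insensitive substring match. The function names and the shape of the repository dicts (`name` and `description` keys) are assumptions for illustration; a real tool might use more careful text matching.

```python
def matches_keywords(repo, keywords):
    """True if any keyword appears in the repository's name or description."""
    parts = [repo.get("name"), repo.get("description")]
    text = " ".join(part for part in parts if part).lower()
    return any(keyword.lower() in text for keyword in keywords)


def filter_repos(repos, keywords):
    """Keep only the repositories that mention at least one keyword."""
    return [repo for repo in repos if matches_keywords(repo, keywords)]


repos = [
    {"name": "scraper-kit", "description": "Helpers for web scraping"},
    {"name": "dotfiles", "description": None},
]
print(filter_repos(repos, ["scraping"]))
```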

What’s Next?

We covered the basics of web scraping in this post and only touched a few of the many use cases for it. requests and beautifulsoup are powerful and relatively simple tools for web scraping, but you can also check out some of the more advanced libraries I highlighted at the beginning of the post for even more functionality. The next steps would be to build more complex scrapers that could be made of multiple scraping functions from many different sources. There are endless ways these scrapers can be integrated into any project that would benefit from data that’s publicly available on the web. Eventually, you’ll have so many web scraping functions running that you’ll have to start thinking about moving your computation to a home server or the cloud!

This post is a part of Kite’s new series on Python. You can check out the code from this and other posts on our GitHub repository.

What is serverless computing?

Serverless is an approach to computing that offloads responsibility for common infrastructure management tasks (e.g., scaling, scheduling, patching, provisioning, etc.) to cloud providers and tools, allowing engineers to focus their time and effort on the business logic specific to their applications or process.

The most useful way to define and understand serverless is focusing on the handful of core attributes that distinguish serverless computing from other compute models, namely:

- The serverless model requires no management and operation of infrastructure, enabling developers to focus more narrowly on code/custom business logic.

- Serverless computing runs code only on-demand on a per-request basis, scaling transparently with the number of requests being served.

- Serverless computing enables end users to pay only for resources being used, never paying for idle capacity.

Serverless is fundamentally about spending more time on code, less on infrastructure.

Are there servers in serverless computing?

The biggest controversy associated with serverless computing is not around its value, use cases, or which vendor offerings are the right fit for which jobs, but rather the name itself. An ongoing argument around serverless is that the name is not appropriate because there are still servers in serverless computing.

The name “serverless” has persisted because it describes an end user’s experience. In a technology that is described as “serverless,” the management needs of the underlying servers are invisible to the end user. The servers are still there; you just don’t see them or interact with them.

Serverless vs. FaaS

Serverless and Functions-as-a-Service (FaaS) are often conflated with one another but the truth is that FaaS is actually a subset of serverless. As mentioned above, serverless is focused on any service category, be it compute, storage, database, etc. where configuration, management, and billing of servers are invisible to the end user. FaaS, on the other hand, while perhaps the most central technology in serverless architectures, is focused on the event-driven computing paradigm wherein application code, or containers, only run in response to events or requests.

Serverless architectures pros and cons

Pros

While serverless computing has many individual technical benefits, four stand out:

- It enables developers to focus on code, not infrastructure.

- Pricing is done on a per-request basis, allowing users to pay only for what they use.

- For certain workloads, such as ones that require parallel processing, serverless can be both faster and more cost-effective than other forms of compute.

- Serverless application development platforms provide almost total visibility into system and user times and can aggregate the information systematically.

Cons

While there is much to like about serverless computing, there are some challenges and trade-offs worth considering before adopting it:

- Long-running processes: FaaS and serverless workloads are designed to scale up and down perfectly in response to workload, offering significant cost savings for spiky workloads. But for workloads characterized by long-running processes, these same cost advantages are no longer present and managing a traditional server environment might be simpler and more cost-effective.

- Vendor lock-in: Serverless architectures are designed to take advantage of an ecosystem of managed cloud services and, in terms of architectural models, go the furthest to decouple a workload from something more portable, like a VM or a container. For some companies, deeply integrating with the native managed services of cloud providers is where much of the value of cloud can be found; for other organizations, these patterns represent material lock-in risks that need to be mitigated.

- Cold starts: Because serverless architectures forgo long-running processes in favor of scaling up and down to zero, they also sometimes need to start up from zero to serve a new request. For certain applications, this delay isn’t much of an impact, but for something like a low-latency financial application, this delay wouldn’t be acceptable.

- Monitoring and debugging: These operational tasks are challenging in any distributed system, and the move to both microservices and serverless architectures (and the combination of the two) has only exacerbated the complexity associated with managing these environments carefully.
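The cold-start trade-off listed above can be illustrated with a toy model. This is only a simulation under assumed numbers (a 400 ms start-up penalty, 20 ms of work, and a 10-minute idle timeout); real platforms behave differently and these values are not taken from any provider.

```python
class SimulatedFunction:
    """Toy model of a function that scales to zero after a period of inactivity."""

    def __init__(self, cold_start_ms=400, run_ms=20, idle_timeout_s=600):
        self.cold_start_ms = cold_start_ms
        self.run_ms = run_ms
        self.idle_timeout_s = idle_timeout_s
        self.last_invoked_s = None  # None means no warm instance exists yet

    def invoke(self, now_s):
        """Return the simulated latency in ms for a request arriving at now_s."""
        is_cold = (
            self.last_invoked_s is None
            or now_s - self.last_invoked_s > self.idle_timeout_s
        )
        self.last_invoked_s = now_s
        return self.run_ms + (self.cold_start_ms if is_cold else 0)


fn = SimulatedFunction()
latencies = [fn.invoke(t) for t in (0, 5, 30, 2000)]
print(latencies)  # [420, 20, 20, 420]
```

The first request and the one arriving after a long idle gap pay the start-up penalty, while requests that hit a warm instance do not.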

Understanding the serverless stack

Defining serverless as a set of common attributes, instead of an explicit technology, makes it easier to understand how the serverless approach can manifest in other core areas of the stack.

- Functions as a Service (FaaS): FaaS is widely understood as the originating technology in the serverless category. It represents the core compute/processing engine in serverless and sits in the center of most serverless architectures. See 'What is FaaS?' for a deeper dive into the technology.

- Serverless databases and storage: Databases and storage are the foundation of the data layer. A “serverless” approach to these technologies (with object storage being the prime example within the storage category) involves transitioning away from provisioning “instances” with defined capacity, connection, and query limits and moving toward models that scale linearly with demand, in both infrastructure and pricing.

- Event streaming and messaging: Serverless architectures are well-suited for event-driven and stream-processing workloads, which involve integrating with message queues, most notably Apache Kafka.

- API gateways: API gateways act as proxies to web actions and provide HTTP method routing, client ID and secrets, rate limits, CORS, viewing API usage, viewing response logs, and API sharing policies.

Comparing FaaS to PaaS, containers, and VMs

While Functions as a Service (FaaS), Platform as a Service (PaaS), containers, and virtual machines (VMs) all play a critical role in the serverless ecosystem, FaaS is the most central and most definitional. Because of that, it’s worth exploring how FaaS differs from other common models of compute on the market today across key attributes:

- Provisioning time: Milliseconds, compared to minutes and hours for the other models.

- Ongoing administration: None, compared to a sliding scale from easy to hard for PaaS, containers, and VMs respectively.

- Elastic scaling: Each action is always instantly and inherently scaled, compared to the other models which offer automatic—but slow—scaling that requires careful tuning of auto-scaling rules.

- Capacity planning: None required, compared to the other models requiring a mix of some automatic scaling and some capacity planning.

- Persistent connections and state: Limited ability to persist connections; state must be kept in an external service or resource. The other models can leverage HTTP, keep an open socket or connection for long periods of time, and can store state in memory between calls.

- Maintenance: All maintenance is managed by the FaaS provider. This is also true for PaaS; containers and VMs require significant maintenance that includes updating/managing operating systems, container images, connections, etc.

- High availability (HA) and disaster recovery (DR): Inherent in the FaaS model with no extra effort or cost. The other models require additional cost and management effort. In the case of both VMs and containers, infrastructure can be restarted automatically.

- Resource utilization: Resources are never idle—they are invoked only upon request. All other models feature at least some degree of idle capacity.

- Resource limits: FaaS is the only model that has resource limits on code size, concurrent activations, memory, run length, etc.

- Charging granularity and billing: Billed in blocks of 100 milliseconds, compared to by the hour (and sometimes by the minute) for the other models.
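To make the billing-granularity point concrete, the sketch below rounds an execution time up to the next 100-millisecond block and multiplies by a per-block price. The function names are hypothetical and the price is expressed in arbitrary units; no real provider's rates are implied.

```python
def billed_duration_ms(duration_ms, block_ms=100):
    """Round an execution time (whole milliseconds) up to the next billing block."""
    blocks = -(-duration_ms // block_ms)  # ceiling division on integers
    return blocks * block_ms


def faas_cost(invocations, duration_ms, price_per_block, block_ms=100):
    """Total cost when every invocation is billed in whole blocks."""
    blocks_per_call = billed_duration_ms(duration_ms, block_ms) // block_ms
    return invocations * blocks_per_call * price_per_block


print(billed_duration_ms(230))  # 300
print(billed_duration_ms(100))  # 100
print(faas_cost(invocations=1000, duration_ms=230, price_per_block=2))  # 6000
```

With no invocations the cost is zero, which is the "never paying for idle capacity" property described earlier, whereas an hourly-billed server accrues cost whether or not it serves requests.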

Use cases and reference architectures

Given their unique combination of attributes and benefits, serverless architectures are well-suited for use cases around data and event processing, IoT, microservices, and mobile backends.

Serverless and microservices

The most common use case of serverless today is supporting microservices architectures. The microservices model is focused on creating small services that do a single job and communicate with one another using APIs. While microservices can also be built and operated using either PaaS or containers, serverless has gained significant momentum given its attributes around small bits of code that do one thing, inherent and automatic scaling, rapid provisioning, and a pricing model that never charges for idle capacity.

API backends

Any action (or function) in a serverless platform can be turned into an HTTP endpoint ready to be consumed by web clients. When enabled for web, these actions are called web actions. Once you have web actions, you can assemble them into a full-featured API with an API Gateway that brings additional security, OAuth support, rate limiting, and custom domain support.

For hands-on experience with API backends, try the tutorial “Serverless web application and API.”
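As a rough illustration of a web action, the sketch below follows the convention used by Apache OpenWhisk, the open source project IBM Cloud Functions is based on: a Python action exposes a `main` function that receives the request parameters as a dict and returns a dict describing the HTTP response. The `name` parameter and the greeting payload are made up for the example, and other platforms use different handler signatures.

```python
import json


def main(params):
    """Entry point: receives request parameters as a dict, returns a response dict."""
    name = params.get("name", "world")  # hypothetical query parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"greeting": f"Hello, {name}!"}),
    }


# Local smoke test; on the platform, the runtime calls main() for each request.
print(main({"name": "Ada"}))
```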

Data processing

Serverless is well-suited to working with structured text, audio, image, and video data around tasks that include the following:

- Data enrichment, transformation, validation, cleansing

- PDF processing

- Audio normalization

- Image rotation, sharpening, and noise reduction

- Thumbnail generation

- Image OCR’ing

- Applying ML toolkits

- Video transcoding

For a detailed example, read “How SiteSpirit got 10x faster, at 10% of the cost.”

Massively parallel compute/“Map” operations

Any kind of embarrassingly parallel task is very well-suited to be run on a serverless runtime. Each parallelizable task results in one action invocation. Possible tasks include the following:

- Data search and processing (specifically Cloud Object Storage)

- Map(-Reduce) operations

- Monte Carlo simulations

- Hyperparameter tuning

- Web scraping

- Genome processing

For a detailed example, read 'How a Monte Carlo simulation ran over 160x faster on a serverless architecture vs. a local machine.'
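The shape of an embarrassingly parallel job can be sketched locally with a Monte Carlo estimate of pi, where every task is independent and could map to one action invocation. Here a thread pool merely stands in for the platform's fan-out (it will not speed up CPU-bound Python code); the task sizes and function names are illustrative assumptions.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def count_hits(task):
    """One independent task: count random points inside the unit quarter-circle."""
    seed, samples = task
    rng = random.Random(seed)  # per-task generator, so tasks share no state
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(num_tasks=8, samples_per_task=20_000):
    """Fan out independent tasks, then combine their partial counts."""
    tasks = [(seed, samples_per_task) for seed in range(num_tasks)]
    # The pool stands in for the platform running one invocation per task.
    with ThreadPoolExecutor(max_workers=num_tasks) as pool:
        total_hits = sum(pool.map(count_hits, tasks))
    return 4 * total_hits / (num_tasks * samples_per_task)


print(estimate_pi())
```

Because no task depends on another, the only coordination needed is the final sum, which is what makes this kind of workload a natural fit for per-request scaling.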

Stream processing workloads

Combining managed Apache Kafka with FaaS and database/storage offers a powerful foundation for real-time buildouts of data pipelines and streaming apps. These architectures are ideally suited for working with all sorts of data stream ingestions (for validation, cleansing, enrichment, transformation), including:

- Business data streams (from other data sources)

- IoT sensor data

- Log data

- Financial (market) data
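The validation, cleansing, and enrichment steps mentioned above can be sketched as a chain of Python generators processing one record at a time. The record fields (`sensor_id`, `temperature`), the alert threshold, and the in-memory list standing in for a Kafka topic are all assumptions made for illustration.

```python
def validate(records):
    """Drop records that are missing required fields."""
    for record in records:
        if "sensor_id" in record and "temperature" in record:
            yield record


def cleanse(records):
    """Convert temperatures to floats, skipping values that cannot be parsed."""
    for record in records:
        try:
            temperature = float(record["temperature"])
        except (TypeError, ValueError):
            continue
        yield {**record, "temperature": temperature}


def enrich(records, threshold=30.0):
    """Attach an alert flag derived from the cleaned temperature."""
    for record in records:
        yield {**record, "alert": record["temperature"] > threshold}


def process(stream):
    return enrich(cleanse(validate(stream)))


events = [
    {"sensor_id": "a1", "temperature": "21.5"},
    {"sensor_id": "a2"},
    {"sensor_id": "a3", "temperature": "n/a"},
    {"sensor_id": "a4", "temperature": 35},
]
for event in process(events):
    print(event)
```

In a serverless setup, each stage (or the whole chain) would typically run inside a function triggered by new messages rather than by iterating over a list.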

Get started with tutorials on serverless computing

Expand your serverless computing skills with these tutorials:

- Quick lab: No infrastructure, just code. See the simplicity of serverless: In this 45-minute lab, you'll create an IBM Cloud account and then use Node.js to create an action, an event-based trigger, and a web action.

- Getting started with IBM Cloud Functions: In this tutorial, learn how to create actions in the GUI and CLI.

- Quickly and easily run your Python code at scale: This tutorial teaches you how to use PyWren, a tool that enables Python developers to scale Python code. You'll learn to set up and use PyWren with IBM Cloud Functions.

- Go serverless with PHP: Experienced PHP developers will learn about serverless PHP. The tutorial covers using IBM Cloud Functions CLI to provision PHP actions, how to invoke PHP actions over HTTP, and how to integrate PHP actions with third-party REST APIs and IBM Cloud services.

Serverless and IBM Cloud

A serverless computing model offers a simpler, more cost-effective way of building and operating applications in the cloud. And it can help smooth the way as you modernize your applications on your journey to cloud.

Take the next step:

- Learn about IBM Cloud Code Engine, a pay-as-you-use serverless platform based on Red Hat OpenShift that lets developers deploy their apps using source code, container images or creating batch jobs with no Kubernetes skills needed.

- Explore other IBM products and tools that can be used with IBM Cloud Code Engine, including IBM Watson APIs, Cloudant, Object Storage, and Container Registry.

Get started with an IBM Cloud account today.